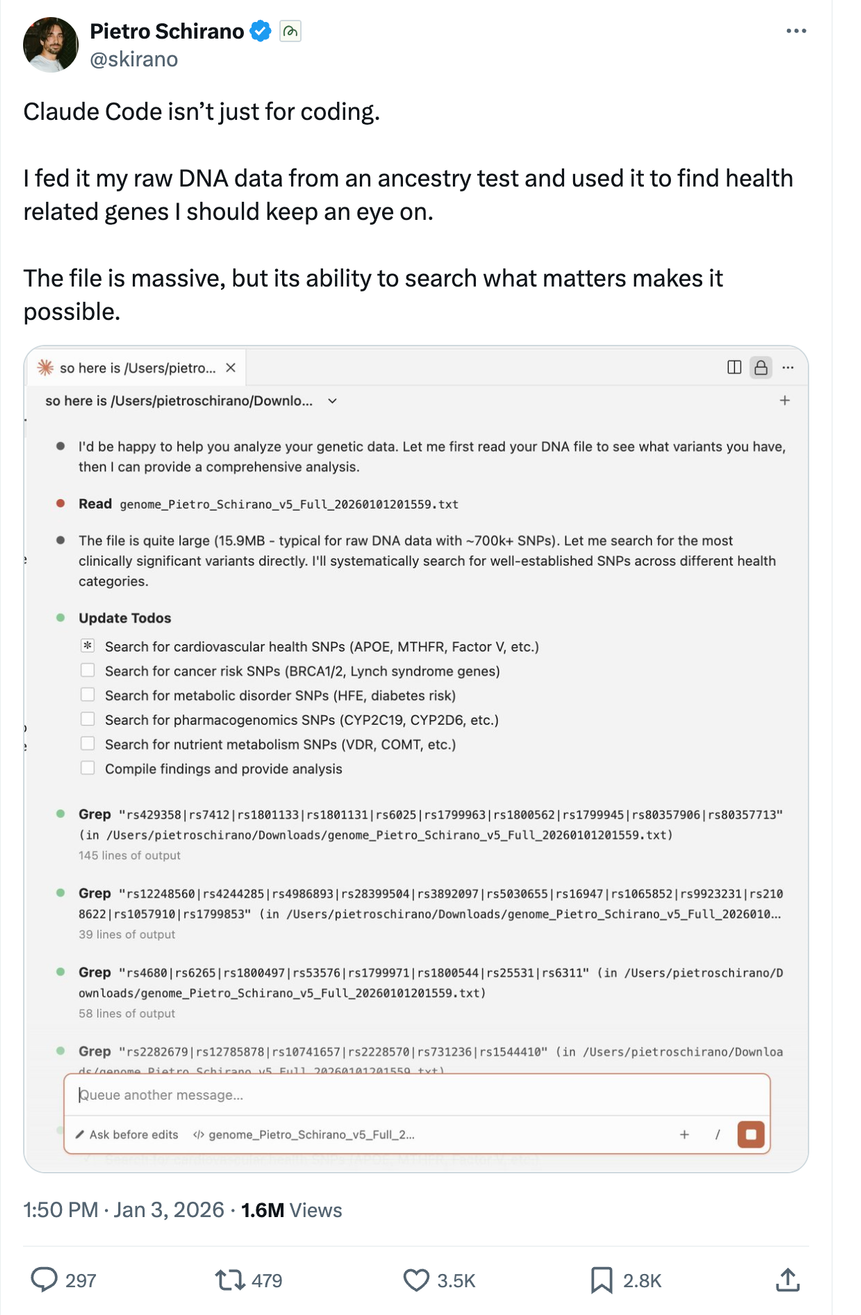

When a Developer AI Tool Is Used for Genetic Interpretation

A recent public account describes a developer-focused AI tool being used to analyze raw human DNA data for health-related insights. It shows how general-purpose systems can enable sensitive inference outside regulated medical contexts, and how such repurposing challenges existing assumptions about risk classification and oversight.

What was observed

Who described the use:

A user publicly documented their experience using Claude Code, Anthropic’s developer-oriented AI tool.

What occurred:

The user uploaded a raw ancestry DNA file containing genetic markers and used the system’s file-search and analysis capabilities to identify genes associated with health conditions they described as worth “keeping an eye on.”

In practical terms, a tool positioned for software development was applied to extract health-relevant insights from genetic data.

Where it occurred:

The activity took place entirely within a general-purpose developer AI environment, not within a medical, clinical, or genomics-specific platform.

When it became visible:

The behavior was described in a recent public user post. It reflects real-world usage rather than a product launch, feature update, pilot, or policy change.

No new technical capability was introduced. No policies were updated. Existing functionality was applied to a high-sensitivity data type.

How this behavior is interpreted depends on a shift in governance frameworks, which increasingly assess AI systems by the capabilities they enable rather than by their stated purpose.


The following sections examine how this use case is likely to be interpreted across governance, security, healthcare compliance, and regulatory oversight.